Using AI to Write Web Scrapers

I have 5000 memes in my “Saved” collection on Instagram. There’s no reason I need to download them, but it might be fun to look back in 5 years. I could also make compilations to upload or do whatever else.

So, after considering different approaches and investigating what HTML Instagram serves on page load, I decided we would scroll the Saved page on desktop, wait n seconds, see if page height changed, if it did, continue, else, try to scroll again and check, failing after three scrolls with no height changes. I forget exactly how it grabs the link but here’s the chunk of Javascript I used (run it in web console/dev tools)

Here it is:

(async function scrapeSavedPosts() {

const POST_PATH_RE = /^\/p\/[^/]+\/?$/;

const baseUrl = "https://www.instagram.com";

function extractPostLinks() {

const anchors = document.querySelectorAll("a[href]");

const links = Array.from(anchors)

.map(a => a.getAttribute("href"))

.filter(href => href && POST_PATH_RE.test(href));

return new Set(links);

}

function getRandomDelay(minMs, maxMs) {

return Math.floor(Math.random() * (maxMs - minMs + 1)) + minMs;

}

async function scrollOnce() {

window.scrollTo(0, document.body.scrollHeight);

const delay = getRandomDelay(5000, 10000); // 5 to 10 seconds now

console.log(`Waiting ${delay} ms for posts to load...`);

await new Promise(resolve => setTimeout(resolve, delay));

}

let previousCount = 0;

let allLinks = new Set();

let previousHeight = document.body.scrollHeight;

let noChangeCount = 0;

const maxNoChangeAttempts = 3;

while (true) {

const currentLinks = extractPostLinks();

currentLinks.forEach(link => allLinks.add(link));

if (allLinks.size === previousCount) {

console.log(`No new posts detected after last scroll.`);

} else {

previousCount = allLinks.size;

}

await scrollOnce();

const currentHeight = document.body.scrollHeight;

if (currentHeight > previousHeight) {

console.log(`Page height increased from ${previousHeight} to ${currentHeight}. Continuing...`);

previousHeight = currentHeight;

noChangeCount = 0;

} else {

noChangeCount++;

console.log(`Page height did NOT increase. Attempt ${noChangeCount} of ${maxNoChangeAttempts}.`);

if (noChangeCount >= maxNoChangeAttempts) {

console.log(`Reached max attempts without height increase. Stopping scroll.`);

break;

}

}

}

const fullUrls = Array.from(allLinks).map(p => baseUrl + p);

const textOutput = fullUrls.join("\n");

console.log(textOutput);

// Create and trigger download of the .txt file

const blob = new Blob([textOutput], { type: "text/plain" });

const url = URL.createObjectURL(blob);

const a = document.createElement("a");

a.href = url;

a.download = "instagram_saved_posts.txt";

document.body.appendChild(a);

a.click();

// Clean up

setTimeout(() => {

document.body.removeChild(a);

URL.revokeObjectURL(url);

}, 100);

})();

Warning that I did receive a warning for potentially automated activity when I logged out and in for something else. I don’t know if this script caused that or my multiple failed downloads later on.

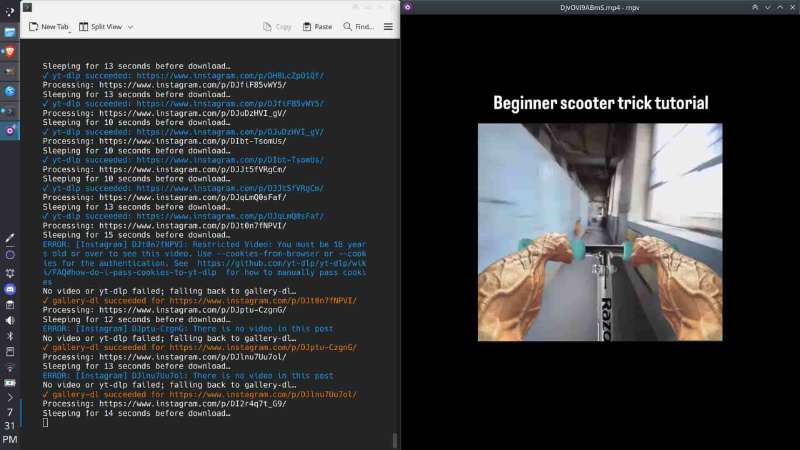

Ok, so we have a nice txt file with 5k Instagram links. Let’s try to use yt-dlp to download them all…

Nope. yt-dlp can’t download images.

Ok, maybe when we come upon a link that yt-dlp fails on, we can download the HTML and grep out the image link.

There are several scontent image links in each HTML file and the HTML files are very long. This isn’t ideal, and even with chatGPT correctly identifying the syntax for what is (probably) the full size post image, there’s a risk of downloading other junk like profile pictures or low res photos.

This caused a long detour of messing around with various Instagram libraries like Instaloader that ultimately did not work even with cookies.

Eventually, I found gallery-dl. As of now, you can’t pass login details to it, you need to create a cookies.txt with your Instagram cookies in it (use this extension) and once I did that it worked ok. The syntax is a little weird, these downloads all have their own quirks about shortcodes or full urls being accepted ETC.

So we have a way to download images and videos, we just need our master script to handle this, manage an archive of what’s been processed (yt-dlp supports this but IDK if gallery-dl does) and add in some delays so hopefully we don’t get banned. Script:

#!/usr/bin/env bash

# -------------------------------------------------------------

# Instagram Media Downloader – flat "Images" output

# -------------------------------------------------------------

# Reads a list of Instagram post URLs from a text file, tries to

# download any videos with yt‑dlp, and falls back to gallery‑dl for

# images. All media files land directly in ./Images with simple

# filenames (no nested username folders).

# -------------------------------------------------------------

INPUT_FILE="$1"

ARCHIVE_FILE="processed.txt"

BLUE='\033[0;34m'

YELLOW='\033[0;33m'

GREEN='\033[0;32m'

NC='\033[0m' # no color

if [[ -z "$INPUT_FILE" || ! -f "$INPUT_FILE" ]]; then

echo "Usage: $0 urls.txt" >&2

exit 1

fi

# Ensure output directories exist

mkdir -p Images Videos

extract_shortcode() {

# Pull the shortcode out of /p/<shortcode>/ in the URL

local url="$1"

echo "$url" | awk -F"/" '{for(i=1;i<=NF;i++) if ($i=="p") print $(i+1)}'

}

colorize_output() {

local color="$1"

while IFS= read -r line; do

echo -e "${color}${line}${NC}"

done

}

touch "$ARCHIVE_FILE"

while IFS= read -r url || [[ -n "$url" ]]; do

# Skip empty lines or comments

[[ -z "$url" || "$url" =~ ^# ]] && continue

shortcode=$(extract_shortcode "$url")

# Skip already‑processed entries (by full URL or shortcode)

if grep -qxF "$url" "$ARCHIVE_FILE" || grep -qxF "$shortcode" "$ARCHIVE_FILE"; then

echo "Skipping already processed: $url"

continue

fi

echo "Processing: $url"

# Respectful delay 10‑15 s

sleep_time=$((RANDOM % 6 + 10))

echo "Sleeping for $sleep_time seconds before download…"

sleep $sleep_time

# ---------------------------------------------------------

# 1) Attempt to grab video with yt‑dlp

# ---------------------------------------------------------

yt_dlp_output=$(mktemp)

yt-dlp --ignore-config --output 'Videos/%(id)s.%(ext)s' \

--progress --quiet --ignore-errors "$url" 2>&1 | tee "$yt_dlp_output" | colorize_output "$BLUE"

yt_status=${PIPESTATUS[0]}

if [[ $yt_status -eq 0 && ! $(<"$yt_dlp_output") =~ "There is no video in this post" ]]; then

echo -e "${BLUE}✓ yt-dlp succeeded: $url${NC}"

# Find and echo the downloaded video files

find Videos -name "*${shortcode}*" -type f -newer "$ARCHIVE_FILE" 2>/dev/null | while read -r video_file; do

echo -e "${GREEN}Saved: $(realpath "$video_file")${NC}"

done

echo "$url" >> "$ARCHIVE_FILE"

rm "$yt_dlp_output"

continue # Done with this URL

fi

rm "$yt_dlp_output"

echo "No video or yt‑dlp failed; falling back to gallery‑dl…"

# ---------------------------------------------------------

# 2) Download images with gallery‑dl to temp dir, then move

# ---------------------------------------------------------

temp_dir=$(mktemp -d)

# Use shortcode as filename since {id} is returning None

if gallery-dl --cookies insta.txt --dest "$temp_dir" \

-o extractor.instagram.filename="{shortcode}_{num}.{extension}" \

--quiet "$url" 2>&1 | colorize_output "$YELLOW"; then

echo -e "${YELLOW}✓ gallery-dl succeeded for $url${NC}"

# Move all downloaded files to Images directory and echo paths

find "$temp_dir" -type f \( -name "*.jpg" -o -name "*.jpeg" -o -name "*.png" -o -name "*.gif" -o -name "*.webp" \) | while read -r file; do

filename=$(basename "$file")

# If filename is still problematic, use shortcode as fallback

if [[ "$filename" =~ ^None\. || "$filename" =~ ^_\. ]]; then

extension="${filename##*.}"

filename="${shortcode}.${extension}"

fi

dest_path="./Images/$filename"

mv "$file" "$dest_path"

echo -e "${GREEN}Saved: $(realpath "$dest_path")${NC}"

done

echo "$url" >> "$ARCHIVE_FILE"

else

echo "✗ gallery-dl failed for $url"

fi

# Clean up temp directory

rm -rf "$temp_dir"

done < "$INPUT_FILE"

echo "All done."

Don’t @ me, I’m sure there are a billion problems with this including using stout and stderr for coloring output but I DON’T CARE. It works. Oh yeah, where it saves images is super weird, again don’t @ me go put it in chatGPT and fix it yourself

So, overall requirements:

-

text file with Instagram links

-

gallery-dl, yt-dlp

-

cookies.txt for gallery dl (and Instagram if you want age restricted videos saved)